Families are the foundational building block of Manor Lords. Without them, you can’t do anything at all in-game, so it’s very important that you try to keep them happy at all times. With that in mind, here’s how to increase Approval in Manor Lords.

Manor Lords Approval Explained

To keep your families happy in Manor Lords, you’ll need Approval. Your Approval rating is indicated by the numerical percentage in the upper corner of the screen, and it affects the following:

- Whether new families can join your settlement (you’ll gain one new family per month at 50% Approval)

- Whether families leave your settlement

- Whether families resort to crime and banditry

- The morale of your militia

Do be warned that once a family resorts to banditry, they turn into an enemy bandit unit that you’ll then have to attack and put down. This is one of the worst things that can happen in Manor Lords, so I’d suggest doing everything in your power to prevent your settlement from reaching that point.

One other thing to note is that your Approval rating also determines how many families join or leave your settlement each month, as shown below:

| Approval Rating | Families Joining or Leaving |
|---|---|
| 75-100% | 2 families join each month |
| 50-74% | 1 family joins each month |
| 25-49% | No change |
| 0-24% | 1 family leaves each month |
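
If it helps to think of these brackets as a simple lookup, here's a rough Python sketch. The function name `monthly_family_change` is made up for illustration, and the thresholds are taken from the table above rather than from the game's actual code, so treat it as an approximation.

```python
def monthly_family_change(approval: int) -> int:
    """Return the net number of families gained (+) or lost (-) per month.

    Thresholds mirror the Approval table in this guide; the function itself
    is a hypothetical helper, not something exposed by the game.
    """
    if not 0 <= approval <= 100:
        raise ValueError("approval must be between 0 and 100")
    if approval >= 75:
        return 2   # 75-100%: two families join
    if approval >= 50:
        return 1   # 50-74%: one family joins
    if approval >= 25:
        return 0   # 25-49%: no change
    return -1      # 0-24%: one family leaves


if __name__ == "__main__":
    for rating in (80, 50, 30, 10):
        print(f"{rating}% approval -> {monthly_family_change(rating):+d} families/month")
```

The practical takeaway is that 50% and 75% are the numbers to watch: crossing either one changes how quickly your settlement grows.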

Ways to Increase Approval in Manor Lords

The good news is that there are a few things you can do to increase your Approval rating in Manor Lords. The game isn’t very good at actually telling you what you need to do, but if you find that your Approval is dipping, you can do the following to get it back up:

- Ensure that there are no homeless families wandering around

- Store all unsheltered supplies properly

- Meet the Amenities and Market Supply requirements for your burgage plots

- Make sure there’s variety in your marketplace stalls

We’ll go over each method in more detail down below.

How to Shelter the Homeless

The answer to this one is pretty simple. If you have homeless people wandering around in Manor Lords, this will affect morale and lower your Approval rating. To address this issue, all you need to do is build a burgage plot for them to move into. Make sure you’ve got at least one unassigned family unit to get construction going.

Shelter Your Supplies

This is an easy mistake to make, especially early on in the game. Whenever the storage at your crafting and gathering stations is full, the resources and food they produce will be left unsheltered.

To fix this, make sure you have enough granaries and storehouses to hold everything, or your goods will get ruined. Granaries are for food items, while storehouses are for other generic items. You’ll also want at least one family assigned to the granaries and storehouses to help speed up the logistics.

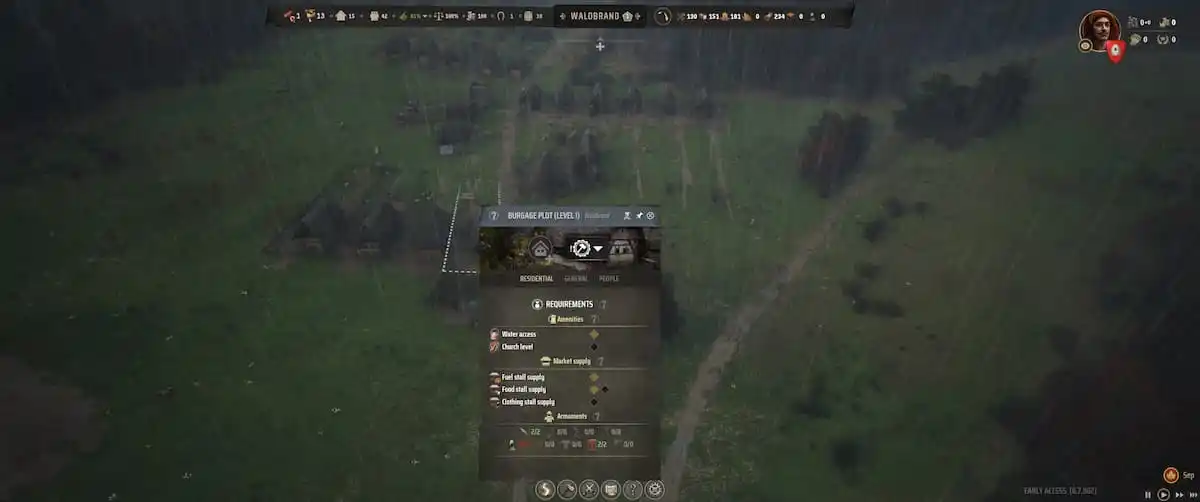

Amenities and Market Supply Requirements

Next up, to increase your Approval rating even further, click on a burgage plot to check whether its Amenities and Market Supply requirements have been met. Every burgage plot needs access to water and a church. The former is easy enough to handle; just build a well anywhere in the region, making sure to place it over underground water.

For the church, you’ll need to convert timber into planks, which you can do with a sawpit. Once you have enough planks, build a church to fulfill this requirement.

As for the Market Supply requirements, you need to build a marketplace somewhere in the region where assigned families can set up stalls to sell excess goods. To meet them, you need to supply the marketplace with food, materials, and clothing, and you should be able to do this naturally as you continue to farm for resources in the region.

Having Variety in the Marketplace

Just having different stall types in the marketplace in Manor Lords isn’t quite enough. You also need to make sure that the individual stalls have a variety of goods for sale. For instance, the food stall shouldn’t just be selling berries; there should be meat and other types of food items available as well.

This isn’t something you really need to worry about until much later in the game, but it is something to keep in mind. Variety will come naturally as you expand into other regions and make use of trade routes.

And that’s everything you need to know about Approval in Manor Lords.