BitLife has a variety of expansion packs available to purchase, and some are better than others. Let's find out which packs you should buy to make your adventures in this life simulator more exciting.

The Best Packs to Buy in BitLife

You’ll find my favorite picks for BitLife content packs below, alongside a few reasons why I think they’re the best around and well worth your purchase.

The Bitizen Pack

Coming in at $7.99, the Bitizen pack is one of the most essential packs for players who plan on spending a lot of time in the game. If you've played recently, you've probably noticed the sheer number of ads that pop up. This pack removes ads for good, which makes it almost a necessity for enjoying the game these days.

You’ll also unlock some extra features, such as the ability to interact with your teachers and bosses, making the role-playing aspect deeper than ever before. If you’re looking for something interesting that also makes the game flow feel better, Bitizen is the pack for you.

God Mode Pack

If you want to push things even further, the God Mode pack is another excellent addition to the game. You can buy Bitizen and God Mode together as a bundle for $12.99, or pick up God Mode later for $7.99. Since buying both packs separately costs $15.98, I'd recommend getting them at once and saving $2.99 that you can put toward another Expansion or Job Pack.


God Mode makes things all the more interesting, especially since you can tweak just about anything to be exactly as you'd like it. You never have to fret about the little things again, now that you can customize your run to your own specifications.

Boss Mode Bundle Pack

If you're hoping to make the most of your BitLife experience, you're going to need to go to work. Thankfully, if you enjoy the grind, there are plenty of alternate jobs available. Whether you want to be an Actor, a Musician, or anything in between, the Boss Mode pack is the best option around. It costs $15.99, so you don't need to purchase jobs individually.

The best part about this pack is that you'll also receive all future Jobs at no additional cost. Spending $15.99 at once may feel like a macrotransaction, but it's much better than buying individual Job Packs for $4.99 a pop: just four of them would already cost $19.96.

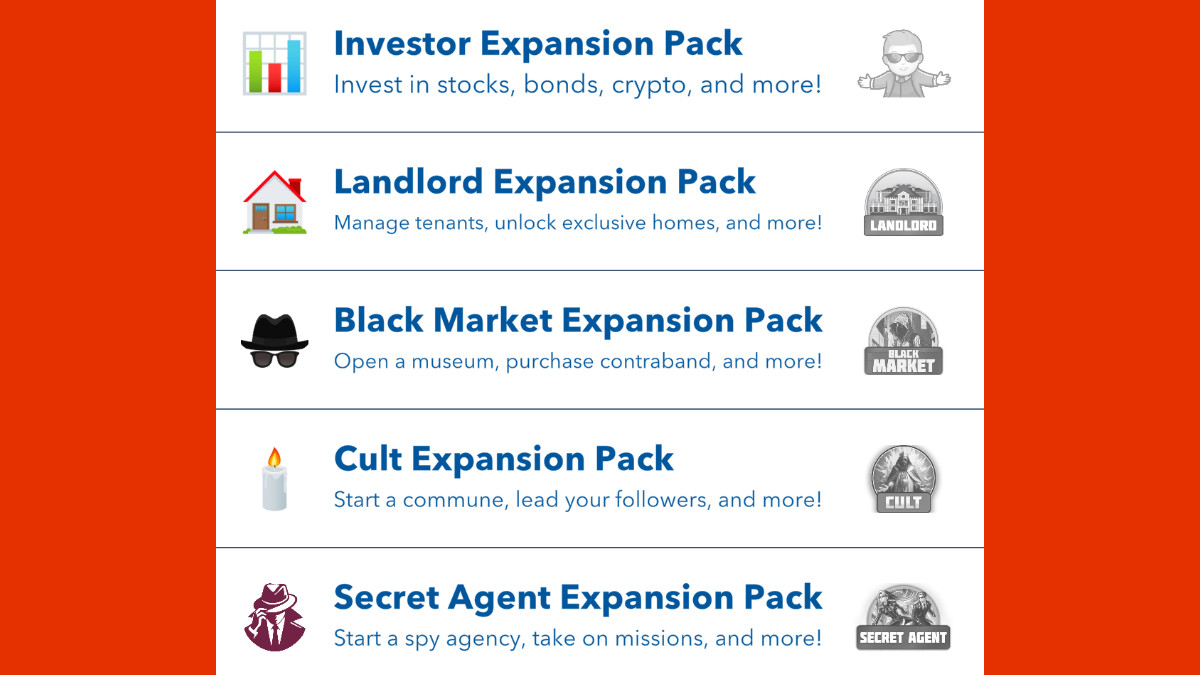

Expansion Packs in BitLife

BitLife fanatics can get their hands on a variety of Expansion Packs for $4.99 apiece. But which one is right for you? That's hard to say, since each Expansion offers something different. Looking to become a Spy? Then purchase the Secret Agent Expansion Pack. Want to lead a Cult? Well, there's something here for you, too.


Before you buy one (or several) of these Expansion Packs, think about how you like to play and what you want to do in your next BitLife run. While there's no all-in-one deal like the Boss Mode pack for Expansions, you can work through their content gradually and pick up another one down the line.

The Challenge Vault Add-On

Weekly Challenges are one of the most exciting parts of BitLife, but they're gone as quickly as they arrive. If only there were a way to access every Challenge that has ever been in the game. Oh wait, there is: for $4.99, the Challenge Vault add-on does exactly that.

Whether you're ready to take on the GTL Challenge or want to go back to the very first of these weekly events, this add-on lets you do it. Just make sure you've got plenty of time before jumping in, because there are a lot of previous challenges to get through.

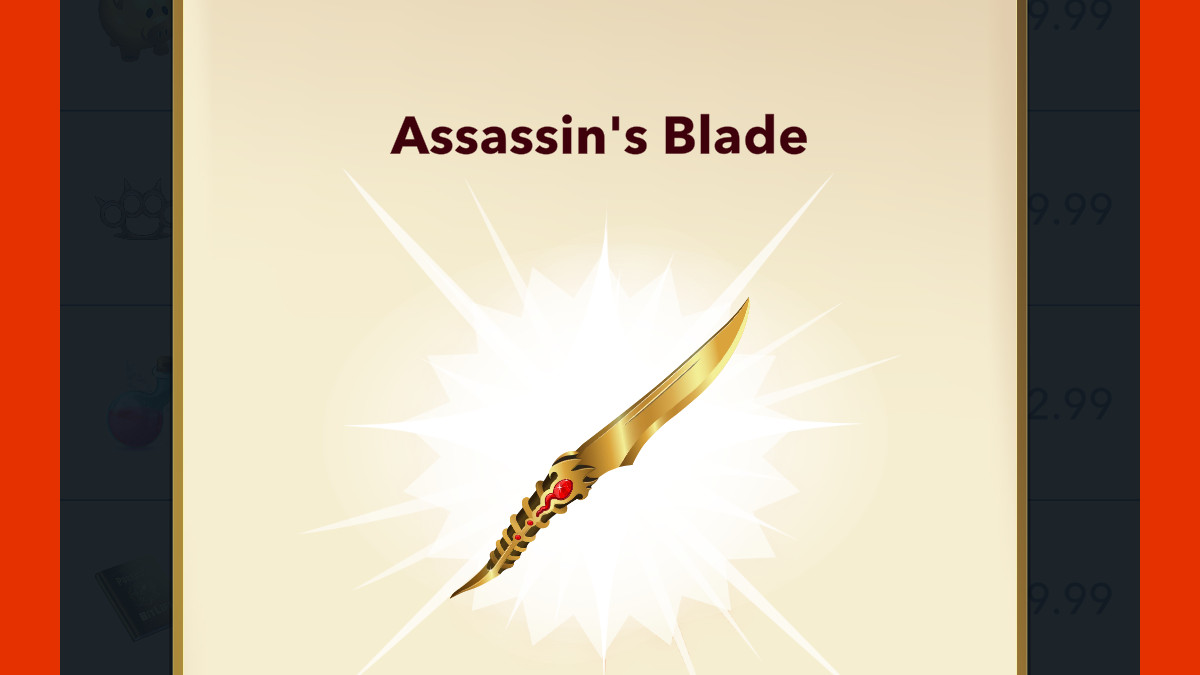

The Assassin’s Blade Add-On

Look, I won't judge how you play BitLife if you don't judge how I play it. There are plenty of different Crimes that players can commit, and whether you're aiming for the Cunning Ribbon or just have a dark side, the Assassin's Blade is one of the best add-ons in the game. It does cost $9.99, but it lets you get away with Murder every time you use it.

If you're planning on being Jack the Ripper 2.0 in your upcoming playthrough, this is the perfect add-on for you. You can hack and slash your way to victory without ever worrying about the police finding you. How busted is that?

There are plenty of other fantastic packs available; these are just a few of my favorites. Be sure to check out the BitLife marketplace and see which one tickles your fancy.

BitLife is available now on iOS and Android.