Updated: April 19, 2024

Found a new code!

Run faster than the wind to the finish line with your favorite anime characters backing you up. As the winner, you’ll have the chance to hatch new heroes and visit new worlds. If you feel like you can’t catch up, Anime Racing 2 codes will help you progress faster!

All Anime Racing 2 Codes List

Anime Racing 2 Codes (Working)

- UPDATE1: Use for 350 Emeralds (New)

- 1MVisit: Use for 300 Emeralds

- Release: Use for 200 Emeralds

Anime Racing 2 Codes (Expired)

- There are currently no expired Anime Racing 2 codes.

How to Redeem Codes in Anime Racing 2

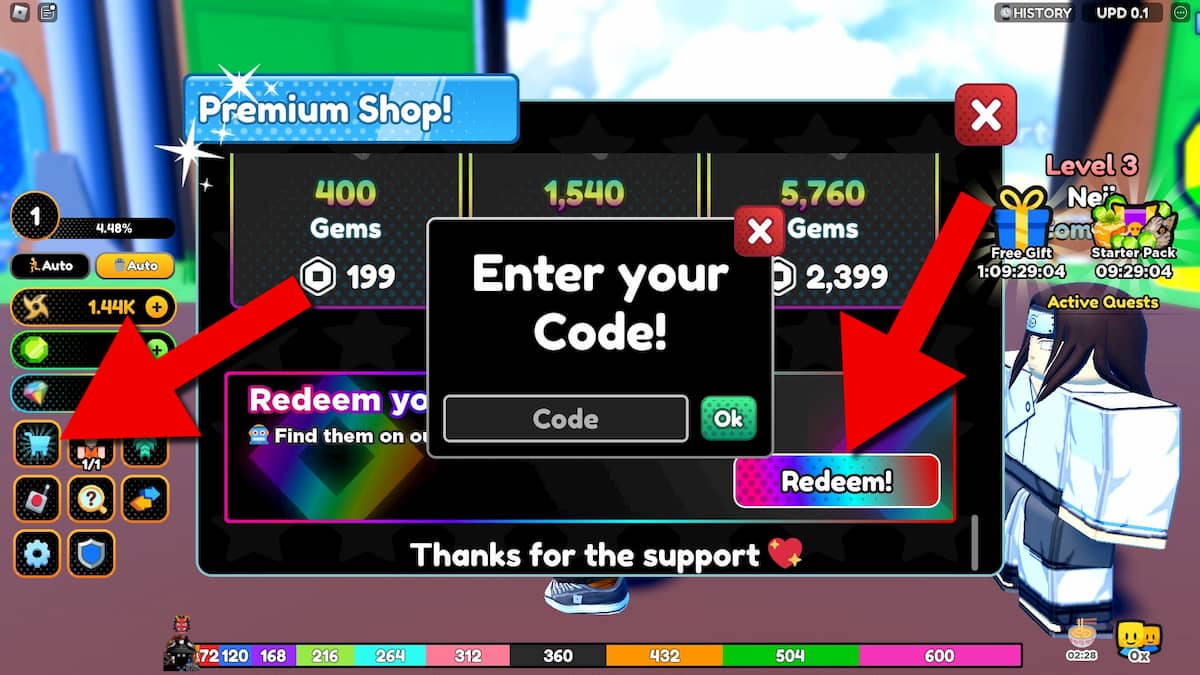

Redeeming Anime Racing 2 codes is simple. Follow these steps:

- Run Anime Racing 2 in Roblox.

- Click on the shopping cart icon in the menu on the left.

- Scroll all the way down to find the code redemption feature.

- Click on Redeem to open the codes pop-up box.

- Use the Code field to input a working code.

- Click on Ok to grab your freebies!

If you’re looking for more wacky racing Roblox titles, check out our articles on Get Fat and Roll Race codes and Race Clicker codes to grab all the free rewards before they’re gone!
